Tristan Stiller

ECE student at Vanderbilt University building production-grade security infrastructure: an automated threat detection and response pipeline that processes live honeypot attacks, with defense-in-depth across 4 VLANs and 14 virtualized services (4 VMs and 10 LXC containers).

Lab Overview

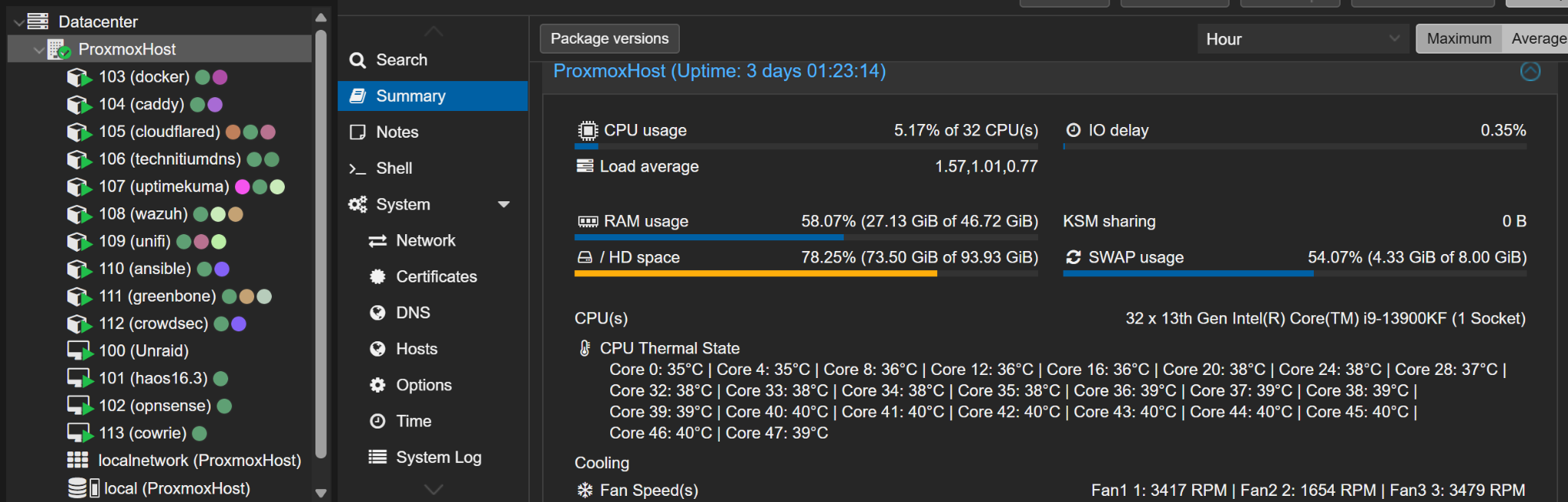

A bare-metal Proxmox hypervisor running 4 VMs and 10 LXC containers with SR-IOV networking, multi-VLAN segmentation, and a full security stack - processing live internet attacks 24/7.

Custom Engineering

The difference between installing software and building systems. These are components I designed, wrote, and debugged from scratch.

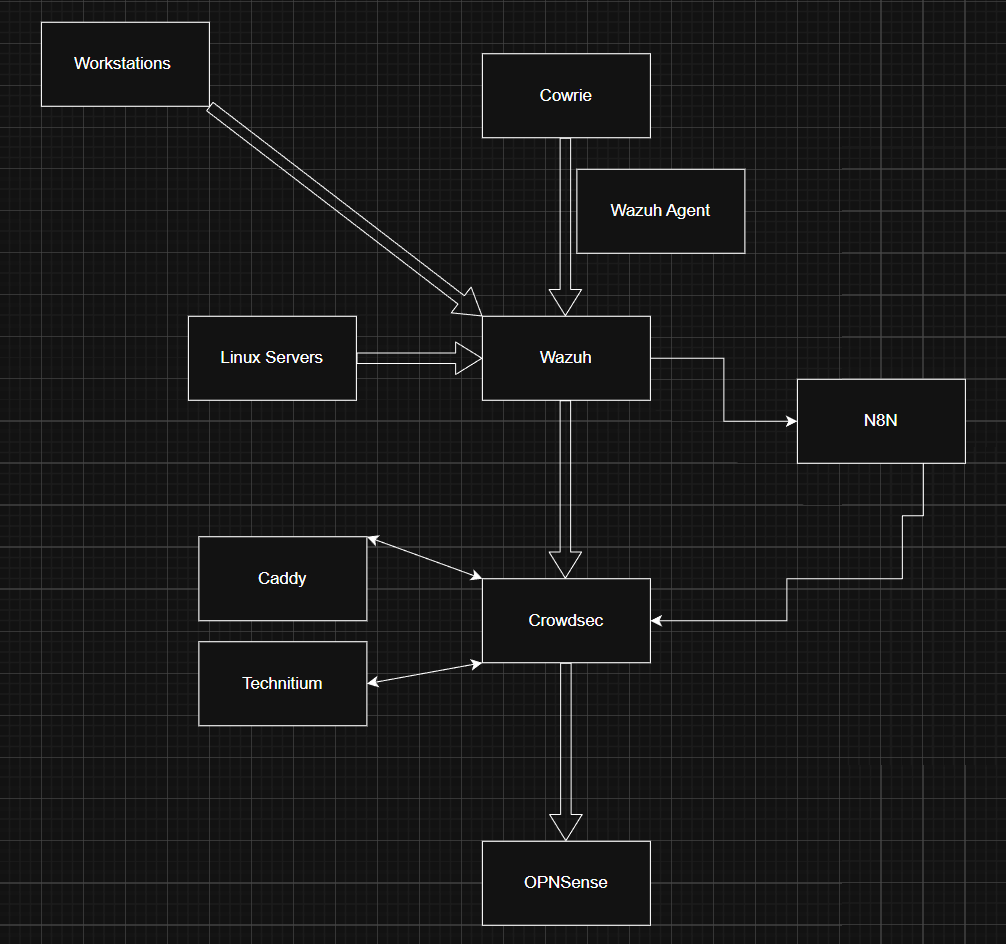

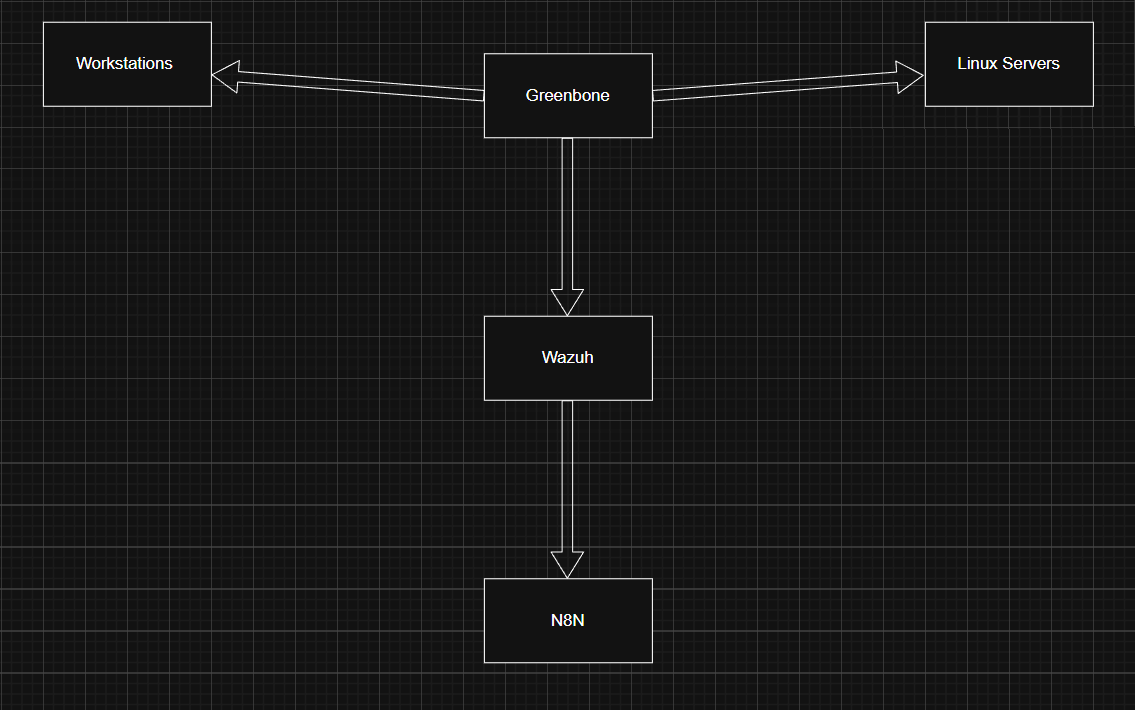

SOAR Pipeline (Wazuh → N8N → CrowdSec → OPNsense)

Custom alert enrichment workflow: Wazuh fires on level 10+ events → N8N enriches with GeoIP → CrowdSec bans for 24h → OPNsense pf table blocks at the firewall. Dual-blocking with automatic expiry sync.
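In the lab this routing lives in N8N nodes. The Python sketch below shows the same escalation step in code. It assumes Wazuh's JSON alert layout (`rule.level`, `rule.description`) and assumes the Cowrie source IP arrives as `data.src_ip`. The returned decision dict is a hypothetical intermediate, not CrowdSec's API schema.

```python
from datetime import datetime, timedelta
from typing import Optional

# Escalation threshold from the pipeline description: level 10+ goes to N8N.
ESCALATION_LEVEL = 10
# CrowdSec ban duration used by the pipeline.
BAN_DURATION = timedelta(hours=24)


def route_alert(alert: dict, now: datetime) -> Optional[dict]:
    """Turn one Wazuh alert into a ban decision, or None if it is not escalated.

    Assumption: the attacker IP sits at data.src_ip (Cowrie's JSON field name
    as decoded by Wazuh's JSON decoder). The output shape is hypothetical.
    """
    rule = alert.get("rule", {})
    src_ip = alert.get("data", {}).get("src_ip")
    if rule.get("level", 0) < ESCALATION_LEVEL or not src_ip:
        return None  # below threshold, or nothing to block
    return {
        "ip": src_ip,
        "reason": rule.get("description", "wazuh alert"),
        "duration": BAN_DURATION,
        "expires_at": now + BAN_DURATION,
    }
```

Alerts without a source IP are dropped rather than escalated, because the firewall side can only act on an address.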

AI-Augmented Honeypot

Local LLM (qwen3:14b via Ollama) generates realistic Debian 7 shell responses for unknown commands. Attackers see fake crontabs, Docker errors, and system info instead of "command not found."
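The sketch below shows one way to ask a local Ollama instance for a fake shell response. It uses Ollama's `/api/generate` endpoint on the default port 11434 with the `model`, `system`, `prompt`, and `stream` fields. The system prompt and the `root@srv` shell prompt are hypothetical, because the actual Cowrie integration's prompt isn't described here. qwen3 models can emit a `<think>...</think>` preamble, so the sketch strips that block before returning.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL = "qwen3:14b"

# Hypothetical system prompt; not the lab's actual prompt.
SYSTEM_PROMPT = (
    "You are a Debian 7 (wheezy) server. Reply only with the raw terminal "
    "output of the command: no explanations, no markdown."
)


def build_request(command: str, cwd: str = "/root") -> dict:
    """Build a non-streaming /api/generate payload for one unrecognized command."""
    return {
        "model": MODEL,
        "system": SYSTEM_PROMPT,
        "prompt": f"root@srv:{cwd}# {command}",  # hypothetical hostname
        "stream": False,
    }


def clean_output(text: str) -> str:
    """Drop a qwen3 <think>...</think> block if present and trim whitespace."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def fake_shell_output(command: str, timeout: float = 20.0) -> str:
    """Query Ollama and return cleaned terminal-style output."""
    payload = json.dumps(build_request(command)).encode()
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # Non-streaming responses carry the whole generation in "response".
    return clean_output(body["response"])
```

A production hook would probably fall back to Cowrie's normal "command not found" on a timeout, so a slow model never stalls the session.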

22 Custom Wazuh Detection Rules

Hand-written rules in the ID ranges 100050-100061 (Cowrie), 100100-100108 (Authentik SSO), and 100200-100204 (Caddy access logs), built on static field mapping, JSON decoder integration, and deliberate evaluation ordering.

Daily Security Digest

Automated 8AM briefing via N8N cron: queries Wazuh API for alert counts, top attacker IPs, firewall state table, and CrowdSec ban summary. Posts to Discord with severity-colored embeds.
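The sketch below builds the Discord side of the digest. The body shape follows Discord's webhook API: an `embeds` list whose items carry `title`, a decimal RGB `color`, and `fields` with `name`/`value`/`inline`. The severity cutoffs, colors, and field labels are assumptions. The counts themselves would come from the Wazuh API and CrowdSec queries, which are not shown.

```python
from typing import Dict, List, Tuple

# Hypothetical palette; Discord embeds take the color as a decimal RGB integer.
COLORS: Dict[str, int] = {
    "critical": 0xE74C3C,
    "high": 0xE67E22,
    "medium": 0xF1C40F,
    "low": 0x2ECC71,
}


def severity(max_level: int) -> str:
    """Bucket the highest Wazuh rule level seen in the window (assumed cutoffs)."""
    if max_level >= 12:
        return "critical"
    if max_level >= 10:
        return "high"
    if max_level >= 7:
        return "medium"
    return "low"


def build_digest(alert_count: int, max_level: int, top_ips: List[Tuple[str, int]],
                 pf_states: int, active_bans: int) -> dict:
    """Build a Discord webhook body with one severity-colored embed."""
    ips = "\n".join(f"{ip} ({hits} alerts)" for ip, hits in top_ips) or "none"
    return {
        "embeds": [{
            "title": "Daily Security Digest",
            "color": COLORS[severity(max_level)],
            "fields": [
                {"name": "Alerts (last 24h)", "value": str(alert_count), "inline": True},
                {"name": "pf states", "value": str(pf_states), "inline": True},
                {"name": "Active CrowdSec bans", "value": str(active_bans), "inline": True},
                {"name": "Top attacker IPs", "value": ips, "inline": False},
            ],
        }]
    }
```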

IPv6 Dual-Stack with ULA VLANs

DHCPv6-PD from Comcast (public /64 on LAN) + ULA fdc8:7f3a:1e2b::/48 for VLANs. Custom radvd patch script + boot hook to survive dhcp6c renewals that wipe VLAN stanzas.
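The radvd patch could work by re-appending any VLAN stanza that a dhcp6c renewal dropped, which makes it safe to run repeatedly from a hook. In the sketch below, the directives (`AdvSendAdvert`, `AdvManagedFlag`, `prefix`, `AdvOnLink`, `AdvAutonomous`) are standard radvd.conf syntax. The interface names, the prefix mapping, and the append-if-missing strategy are assumptions about the script, not its actual contents. A radvd restart would follow the patch.

```python
import re
from typing import Dict

# Hypothetical VLAN interface -> ULA /64 mapping; device names differ per install.
VLAN_PREFIXES: Dict[str, str] = {
    "vlan0.30": "fdc8:7f3a:1e2b:30::/64",
    "vlan0.40": "fdc8:7f3a:1e2b:40::/64",
    "vlan0.99": "fdc8:7f3a:1e2b:99::/64",
}

# Pure SLAAC stanza (M=0), matching the VLAN setup described for this lab.
STANZA = """interface {ifname} {{
    AdvSendAdvert on;
    AdvManagedFlag off;
    prefix {prefix} {{
        AdvOnLink on;
        AdvAutonomous on;
    }};
}};
"""


def patch_radvd(conf: str, vlans: Dict[str, str] = VLAN_PREFIXES) -> str:
    """Re-append any VLAN stanza missing from radvd.conf; idempotent."""
    out = conf.rstrip("\n") + "\n"
    for ifname, prefix in vlans.items():
        # Look for "interface <name> {" at the start of a line.
        pattern = rf"^interface\s+{re.escape(ifname)}\s*\{{"
        if re.search(pattern, conf, re.MULTILINE) is None:
            out += "\n" + STANZA.format(ifname=ifname, prefix=prefix)
    return out
```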

Docker Crash Watcher

Custom systemd service monitoring Docker container exits. Posts Discord embeds on non-zero exit codes with 10-minute per-container cooldown to prevent alert storms. Ignores graceful stops (SIGTERM).
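The cooldown and SIGTERM filtering reduce to a small piece of state per container, sketched below. The sketch assumes a graceful stop surfaces as exit code 0 or 143 (128 + SIGTERM's signal number 15). Exit code 137 (SIGKILL, including OOM kills) still alerts. The class and its names are hypothetical.

```python
import time
from typing import Dict, Optional

COOLDOWN_S = 600            # 10-minute per-container cooldown
GRACEFUL_CODES = {0, 143}   # 0 = clean exit, 143 = 128 + SIGTERM (15)


class CrashWatcher:
    """Decide whether a container exit deserves a Discord alert."""

    def __init__(self, cooldown: float = COOLDOWN_S) -> None:
        self.cooldown = cooldown
        self._last_alert: Dict[str, float] = {}

    def should_alert(self, container: str, exit_code: int,
                     now: Optional[float] = None) -> bool:
        if exit_code in GRACEFUL_CODES:
            return False  # normal stop, e.g. `docker stop`
        now = time.monotonic() if now is None else now
        last = self._last_alert.get(container)
        if last is not None and now - last < self.cooldown:
            return False  # still cooling down: suppress to avoid an alert storm
        self._last_alert[container] = now
        return True
```

Exit events could come from `docker events --filter event=die --format '{{json .}}'`, whose `Actor.Attributes` carry the container `name` and `exitCode`.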

Design Philosophy

Detection → Enrichment → Response Pipeline

A fully automated SOAR pipeline that detects attacks on the honeypot, enriches alerts with threat intelligence, and blocks malicious IPs at the firewall - all within 30 seconds, with no human intervention required.

Public Internet → WAN:22 → DMZ (VLAN 99) → JSON Log Ingestion → Rule Matching → Enrichment + Routing → 24h Ban Decision → WAN Block Rule

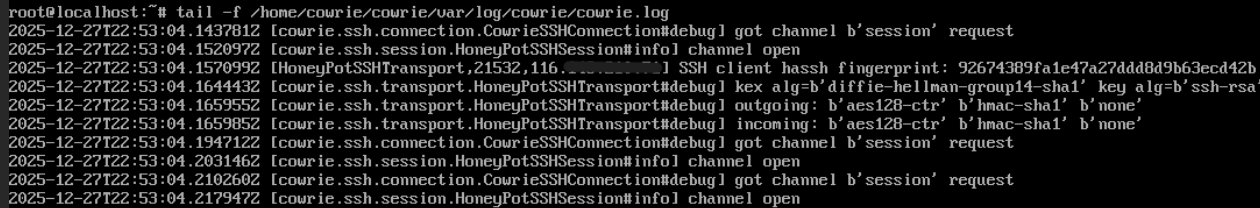

🍯 Stage 1: Cowrie SSH Honeypot + AI Deception

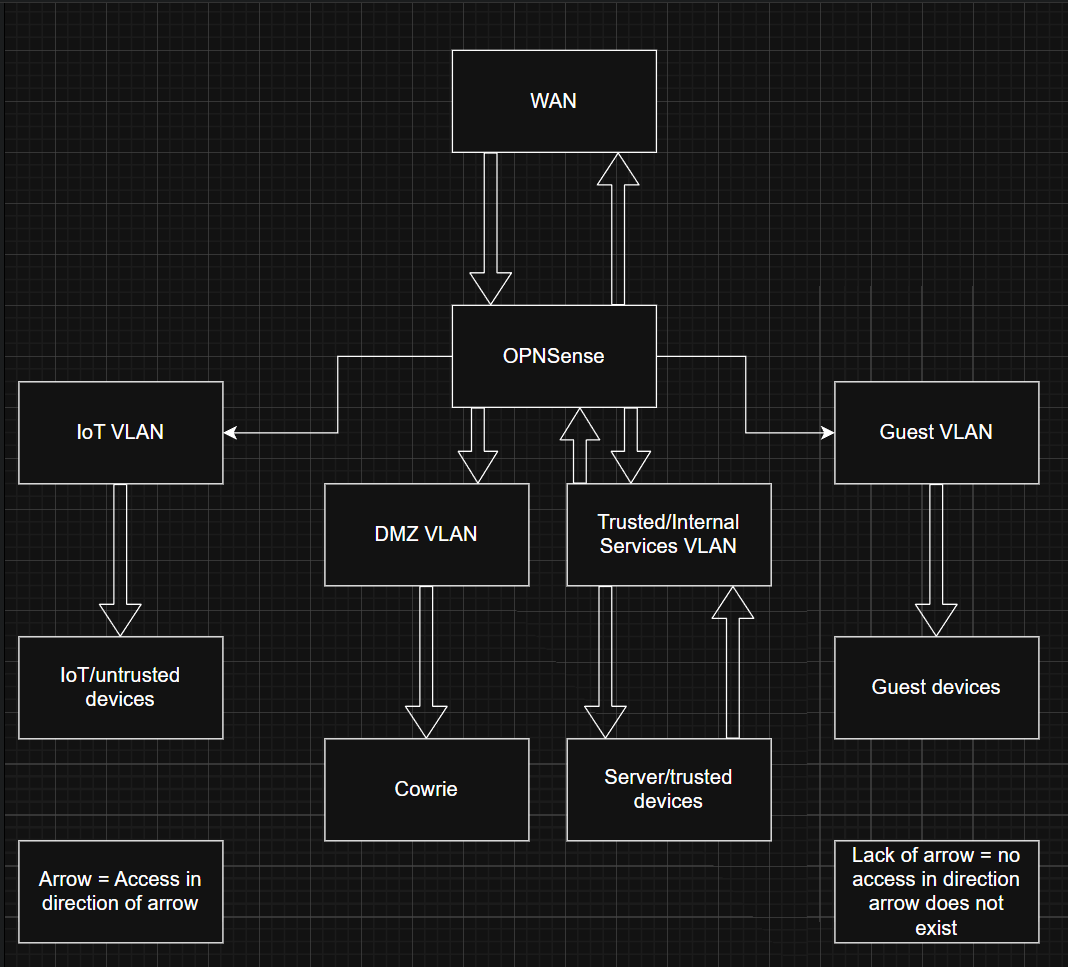

A medium-interaction SSH honeypot in an isolated DMZ (VLAN 99). OPNsense NAT redirects public SSH traffic on WAN port 22 to it. The honeypot captures credential stuffing, shell commands, and malware drops from real attackers.

- Isolated in DMZ VLAN 99 - no lateral movement to production networks

- AuthRandom authentication: randomly accepts credentials after 3-6 attempts to maximize session depth

- Fake SSH banner `SSH-2.0-OpenSSH_6.0p1 Debian-4+deb7u2` makes the host look like a vulnerable Debian 7 server

- AI-augmented responses: local Ollama (qwen3:14b) generates realistic Debian 7 terminal output for unrecognized commands

- Malware downloads captured to disk, monitored by Wazuh FIM with VirusTotal integration
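Cowrie's own AuthRandom class implements this behavior natively. The sketch below only models it as described above and is not Cowrie's code. Per source IP, it draws a random threshold between 3 and 6 and accepts the login on that attempt. After that, it keeps accepting only the same username/password pair, so re-logins look consistent to the attacker.

```python
import random
from typing import Dict, Optional, Tuple


class RandomAuth:
    """Model of random credential acceptance (hypothetical, not Cowrie's source)."""

    def __init__(self, min_tries: int = 3, max_tries: int = 6,
                 rng: Optional[random.Random] = None) -> None:
        self.min_tries, self.max_tries = min_tries, max_tries
        self.rng = rng or random.Random()
        self.attempts: Dict[str, int] = {}
        self.threshold: Dict[str, int] = {}
        self.accepted: Dict[str, Tuple[str, str]] = {}

    def check(self, ip: str, username: str, password: str) -> bool:
        # Once a pair is accepted for this IP, only that pair works afterwards.
        if ip in self.accepted:
            return self.accepted[ip] == (username, password)
        if ip not in self.threshold:
            self.threshold[ip] = self.rng.randint(self.min_tries, self.max_tries)
        self.attempts[ip] = self.attempts.get(ip, 0) + 1
        # Accept on attempt number `threshold` (somewhere between the 3rd and 6th).
        if self.attempts[ip] >= self.threshold[ip]:
            self.accepted[ip] = (username, password)
            return True
        return False
```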

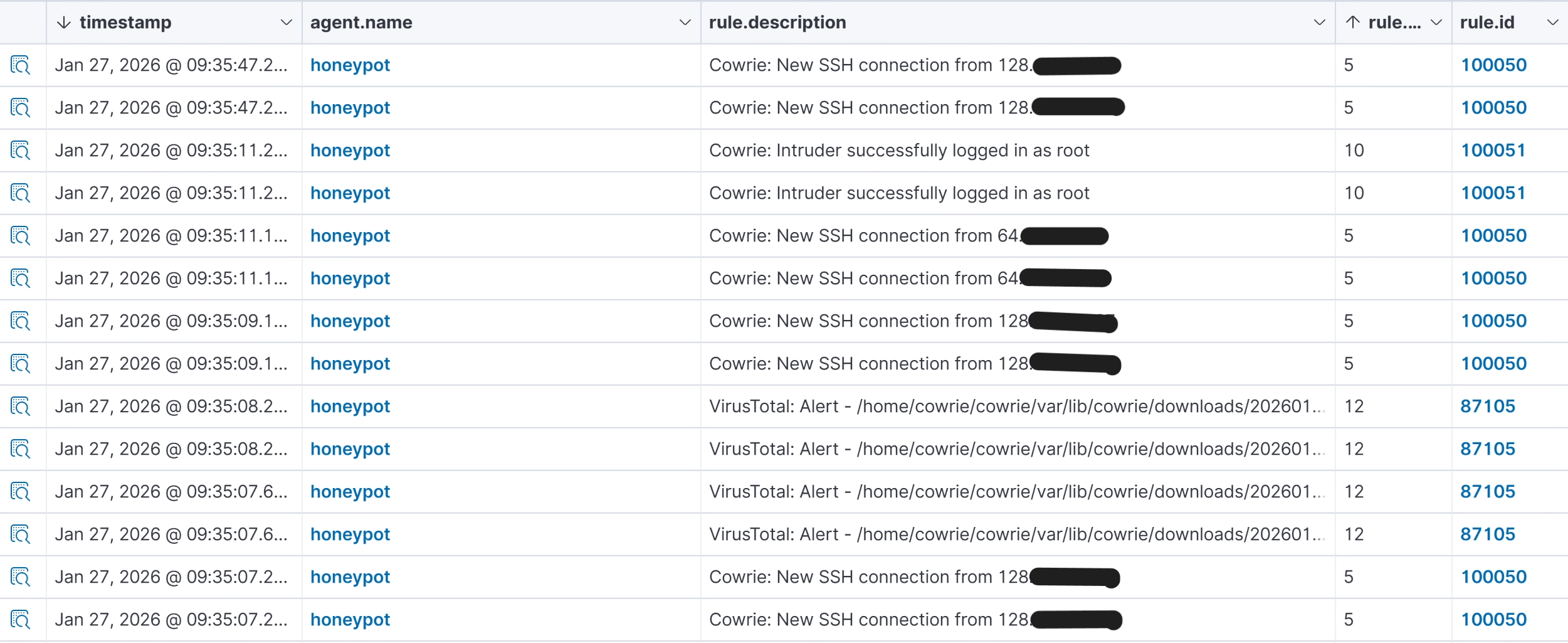

🔍 Stage 2: Wazuh SIEM - Detection Engineering

22 custom rules across 3 rule files process JSON logs from Cowrie, Authentik SSO, and Caddy reverse proxy. The native JSON decoder extracts fields; rules use static field tags and ordered evaluation for precise matching.

- Cowrie rules (100050-100061): connection, login success/fail, commands, file download/upload, brute-force frequency, dangerous commands (wget/curl/reverse shells), cryptominer detection, persistence attempts

- Authentik rules (100100-100108): auth failures, brute-force (5 in 60s), flow execution, admin API mutations, 401/403 responses, worker task failures

- Caddy rules (100200-100204): 4xx/5xx errors, 403 blocks (CrowdSec), web scanning detection (10+ 4xx in 30s)

- Level 10+ alerts automatically trigger the N8N SOAR pipeline

- Level 12 FIM events (malware artifacts) trigger VirusTotal hash lookup
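The brute-force and scanning rules are frequency rules. In Wazuh they are written as composite rules with `frequency` and `timeframe` attributes. The sketch below models the idea as a per-source-IP sliding window. It does not reimplement Wazuh's engine, whose exact counting semantics may differ.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class FrequencyRule:
    """Fire when one source IP produces `frequency` matching events within `timeframe` seconds."""

    def __init__(self, frequency: int, timeframe: float) -> None:
        self.frequency = frequency
        self.timeframe = timeframe
        self.events: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, src_ip: str, ts: float) -> bool:
        window = self.events[src_ip]
        window.append(ts)
        # Evict timestamps that have fallen out of the sliding window.
        while window and ts - window[0] > self.timeframe:
            window.popleft()
        if len(window) >= self.frequency:
            window.clear()  # reset so one burst fires once, not on every extra event
            return True
        return False
```

For example, `FrequencyRule(5, 60)` models the Authentik brute-force rule, and `FrequencyRule(10, 30)` models Caddy web-scan detection.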

⚡ Stage 3: N8N SOAR - Enrichment & Auto-Block

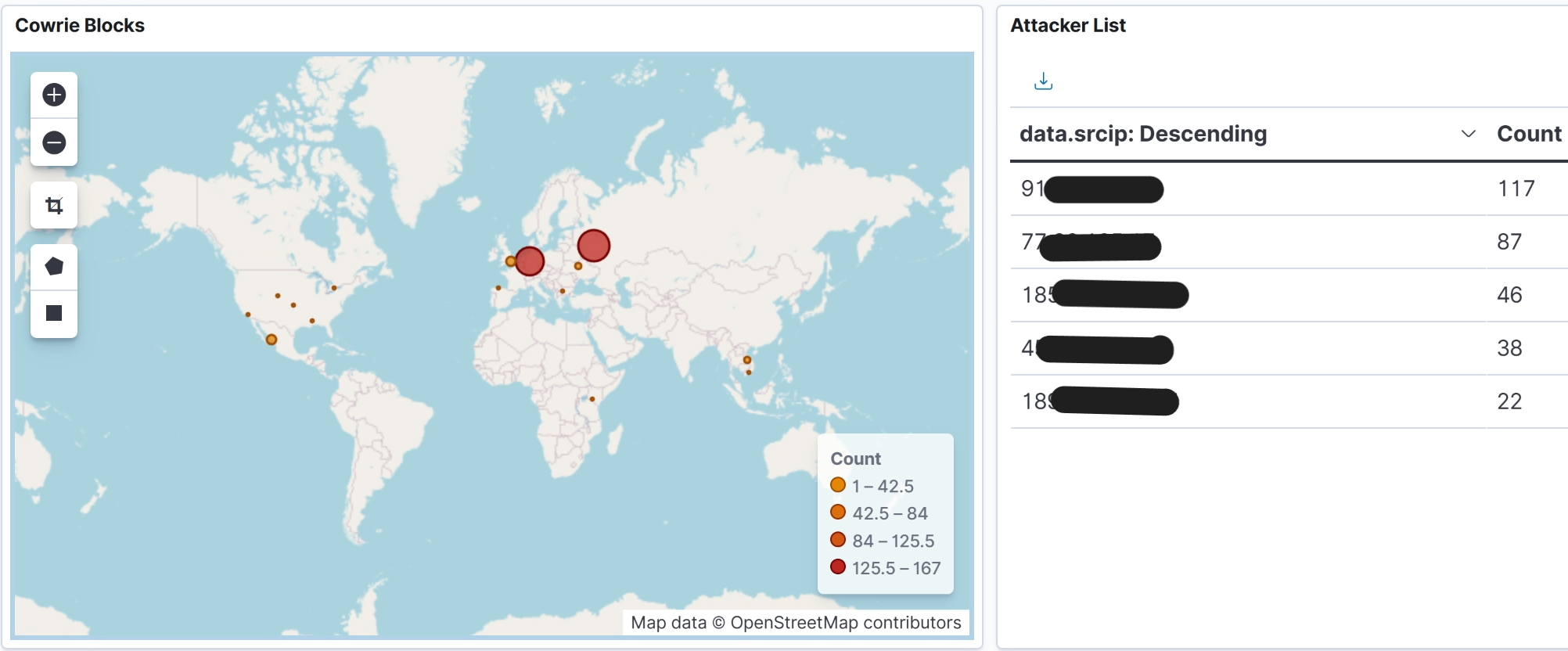

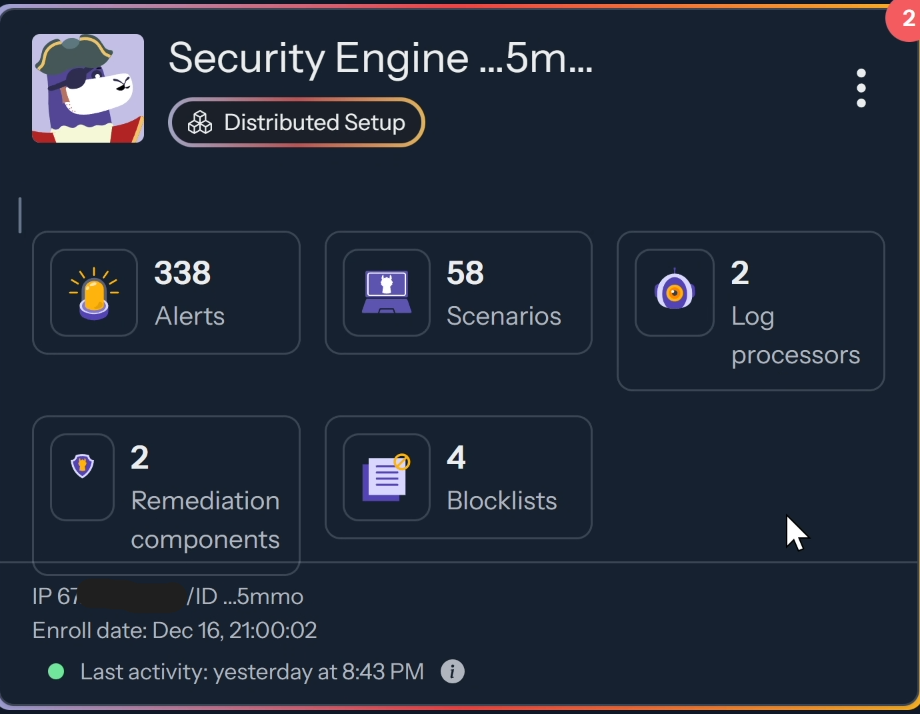

Three custom N8N workflows process Wazuh alerts in real time, enrich them with threat intelligence, and execute automated response actions.

- Alert Enrichment + Auto-Block: receives webhook from Wazuh integratord → enriches with ipwho.is GeoIP → categorizes by severity/type → posts color-coded Discord embed → triggers CrowdSec ban

- CrowdSec Auto-Block: dual-blocks attackers - CrowdSec 24h ban + OPNsense n8n_blocklist pf table (WAN inbound block rule)

- Daily Security Digest: 8AM cron queries Wazuh API for overnight alert summary, top attacking IPs, pf state table stats → Discord briefing

- Blocklist sync cron every 2h: reconciles CrowdSec ban list with OPNsense pf tables, removes expired IPs
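The reconciliation step is a two-way set difference. The sketch below treats CrowdSec's active bans as the source of truth, so expired decisions fall out of the pf table on the next run. The `pfctl -t <table> -T add|delete <addr>` commands are standard pf syntax. Collecting the two IP sets and the OPNsense alias side are not shown, and the function names are hypothetical.

```python
from typing import List, Set, Tuple


def reconcile(crowdsec_bans: Set[str], pf_table: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Return (to_add, to_remove) so the pf table mirrors CrowdSec's active bans."""
    to_add = crowdsec_bans - pf_table      # banned but not yet blocked
    to_remove = pf_table - crowdsec_bans   # expired in CrowdSec, still in pf
    return to_add, to_remove


def pfctl_commands(table: str, to_add: Set[str], to_remove: Set[str]) -> List[List[str]]:
    """Build pfctl invocations (sorted for stable, reviewable output)."""
    cmds = [["pfctl", "-t", table, "-T", "add", ip] for ip in sorted(to_add)]
    cmds += [["pfctl", "-t", table, "-T", "delete", ip] for ip in sorted(to_remove)]
    return cmds
```

One design caution applies: if the CrowdSec query fails and yields an empty set, this logic would flush the whole table. A real cron job should therefore abort on query errors instead of reconciling against nothing.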

Why I Built It This Way

→ Why block at the firewall, not the host?

CrowdSec and OPNsense pf tables enforce bans at the network perimeter. Host-level blocking (iptables, fail2ban) protects only the host it runs on. A firewall rule, by contrast, stops the attacker from reaching anything behind the perimeter, including services that don't have their own IPS.

→ Why dual-block (CrowdSec + OPNsense pf)?

CrowdSec bans expire automatically (24h TTL). OPNsense alias config persists across reboots but pf tables don't auto-populate from aliases. The 2-hour sync cron reconciles both - live pf table for immediate blocking, alias config for reboot persistence.

→ Why an AI honeypot?

Standard Cowrie returns "command not found" for ~80% of attacker commands, which is a dead giveaway. The LLM generates plausible Debian 7 output (fake crontabs, Docker errors, system info), keeping attackers engaged longer and capturing more TTPs. Uses qwen3:14b locally - no API costs, no data exfiltration.

→ Why custom Wazuh rules instead of default rulesets?

Default Wazuh rules don't understand Cowrie's JSON schema or Authentik's structured logs. Custom rules with decoded_as: json and field-level matching extract attacker IPs, usernames, and commands directly from the JSON, enabling precise alerting and correlation that generic syslog rules can't achieve.

Security Architecture

A layered security stack with 6 Wazuh agents across 4 VLANs, automated threat response, and continuous vulnerability assessment.

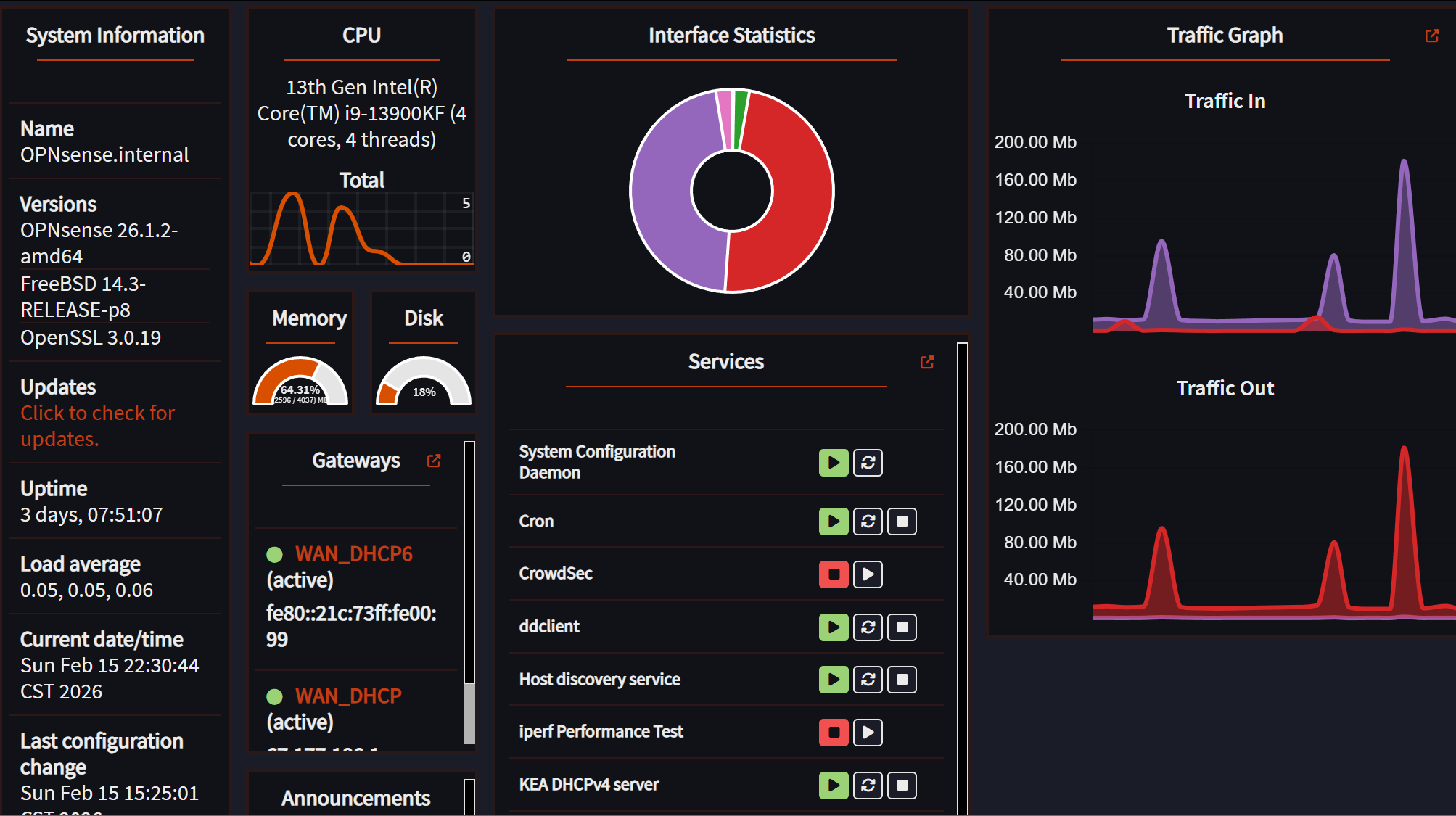

OPNsense Firewall

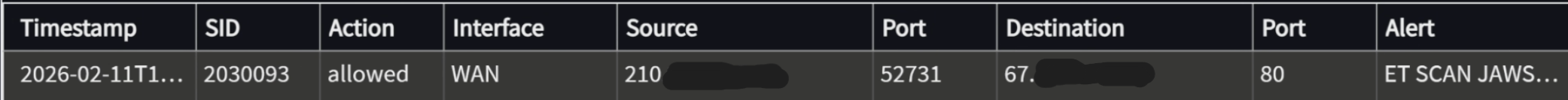

Stateful firewall with Suricata IPS (ET-Pro rules, netmap mode on WAN), multi-VLAN routing, NAT, and DNS interception. Acts as the network's root of trust.

Wazuh SIEM

Centralized SIEM with 6 active agents on Proxmox, Docker, Caddy, OPNsense, Cowrie, and workstation. 22 custom rules, FIM, and VirusTotal integration.

Cowrie Honeypot + AI

SSH honeypot in DMZ VLAN 99 with AI-generated responses via local Ollama LLM. Captures credentials, commands, and malware from real attackers daily.

CrowdSec IPS

Collaborative behavioral IPS. Receives ban decisions from the N8N SOAR pipeline and enforces them at the OPNsense firewall, with a 24h TTL and automatic expiry.

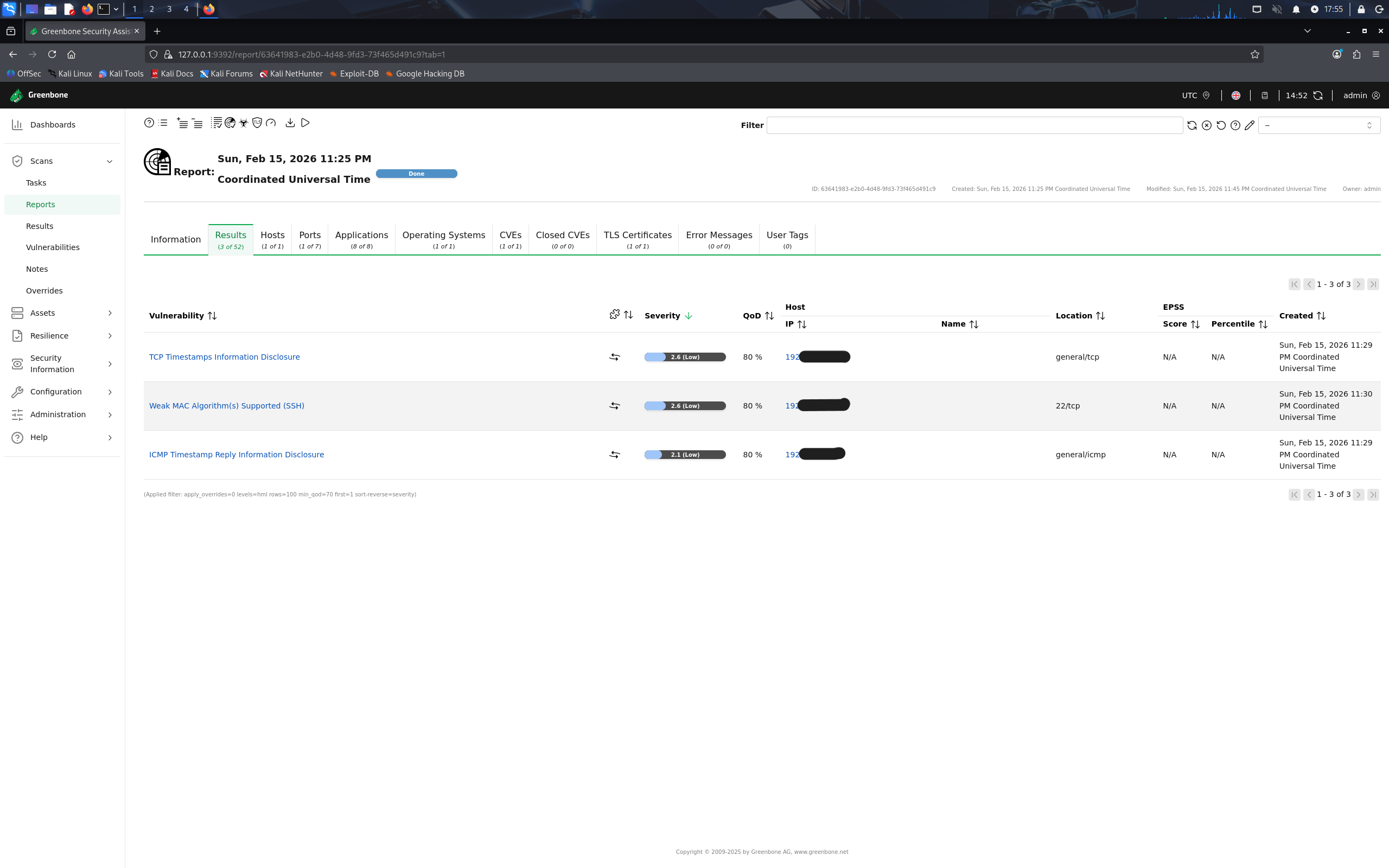

Greenbone Vulnerability Scanner

OpenVAS in a Kali LXC with RAM-constrained sequential scanning (max_hosts=1, max_checks=3). Cron runs it 3x/week: Monday (core infra), Wednesday (Docker), Friday (all LXCs). Results are posted to Discord, and the CT auto-starts for each scan and stops afterward.

Authentik SSO + Cloudflare Access

Domain-level forward auth across 23 services. Cloudflare Access gates external apps with Google MFA; Authentik provides OIDC SSO for all internal services via Caddy.

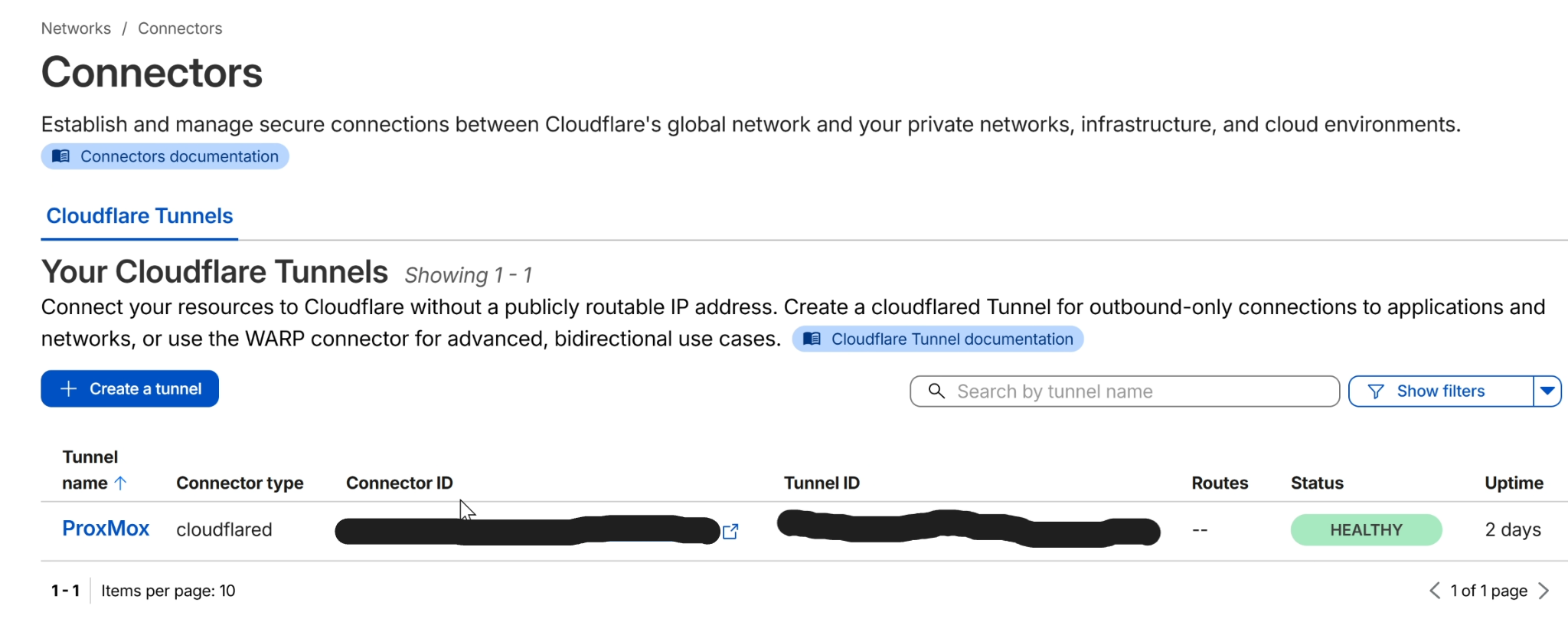

🔐 Access Control Stack

Multi-layer identity and access management with zero-trust principles. No inbound firewall ports are opened for internal services (the honeypot's WAN port 22 is the only exception). All external access goes through Cloudflare Tunnels.

- Cloudflare Access enforces Google account allowlisting before any app is reachable

- Authentik SSO provides domain-level forward auth for 23 internal services via Caddy reverse proxy

- OIDC/SAML with embedded outpost - outpost passthrough handles OAuth callbacks without redirect loops

- Vaultwarden for credential management (self-hosted Bitwarden with its own 2FA)

- SSO exclusions for services with native auth: Jellyfin (API key), Vaultwarden (own 2FA), Home Assistant

Advanced Networking

Enterprise-grade networking with SR-IOV, multi-VLAN segmentation, IPv6 dual-stack, hardened DNS, and zero-exposure external access.

⚡ SR-IOV - Mellanox ConnectX-5

16 Virtual Functions on a ConnectX-5 dual-port 25GbE NIC, each assigned directly to VMs and containers for near-native network I/O with hardware-level traffic isolation.

- Each VM/LXC gets a dedicated SR-IOV VF - bypasses the host network stack entirely

- VF-level VLAN tagging enforced at the PF for DMZ isolation (VLAN 99)

- Trust and spoofcheck configured per-VF via Proxmox hookscripts for persistence across reboots

- PCIe passthrough for OPNsense (full NIC control) and Unraid (SATA controller)
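The per-VF settings map onto standard iproute2 commands (`ip link set dev <PF> vf <N> vlan|spoofchk|trust ...`). Proxmox invokes a hookscript as `<script> <vmid> <phase>`, with phases such as `pre-start`. The sketch below regenerates each guest's VF settings before it starts. The PF name, VMIDs, and per-guest values are hypothetical.

```python
import subprocess
from typing import Dict, List

PF = "enp1s0f0np0"  # hypothetical ConnectX-5 physical-function interface name

# Hypothetical per-guest settings: VF index, optional PF-enforced VLAN, trust, spoofcheck.
VF_CONFIG: Dict[int, dict] = {
    101: {"vf": 0, "vlan": 99, "trust": False, "spoofchk": True},   # e.g. the DMZ honeypot
    102: {"vf": 1, "vlan": None, "trust": True, "spoofchk": False},  # e.g. a guest needing MAC changes
}


def vf_commands(pf: str, settings: dict) -> List[List[str]]:
    """Build the ip-link commands that pin one VF's VLAN, spoofcheck, and trust."""
    base = ["ip", "link", "set", "dev", pf, "vf", str(settings["vf"])]
    cmds = []
    if settings["vlan"] is not None:
        # Tag at the PF: the guest can neither see nor change its VLAN.
        cmds.append(base + ["vlan", str(settings["vlan"])])
    cmds.append(base + ["spoofchk", "on" if settings["spoofchk"] else "off"])
    cmds.append(base + ["trust", "on" if settings["trust"] else "off"])
    return cmds


def hook(vmid: int, phase: str) -> List[List[str]]:
    """Return the commands to run for a Proxmox hookscript call."""
    if phase != "pre-start" or vmid not in VF_CONFIG:
        return []
    return vf_commands(PF, VF_CONFIG[vmid])


def apply(cmds: List[List[str]]) -> None:
    """Execute the commands on the host (requires root)."""
    for cmd in cmds:
        subprocess.run(cmd, check=True)
```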

🌐 IPv6 Dual-Stack

Full IPv6 deployment with Comcast DHCPv6-PD on LAN and ULA addressing for VLANs - a non-trivial configuration given ISP constraints.

- WAN: public /128 via DHCPv6; LAN: public /64 via track6 (Comcast only delegates /64, not /60)

- VLANs use ULA fdc8:7f3a:1e2b::/48. SLAAC needs a full /64 per link, so the single delegated /64 can't be split across multiple subnets

- SLAAC via radvd on all interfaces; managed flag (M=1) on LAN for DHCPv6, pure SLAAC (M=0) on VLANs

- Custom patch script + boot hook to survive dhcp6c PD renewals that regenerate radvd.conf and wipe VLAN stanzas
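The arithmetic behind the ULA choice is worth making concrete. A delegated prefix of length p holds 2^(64 - p) SLAAC-capable /64s, so Comcast's /64 yields exactly one, while a /48 yields 65,536. The sketch below uses Python's standard `ipaddress` module. The mapping of each VLAN to a /64 (reusing the VLAN ID's decimal digits as the hex subnet ID) is a hypothetical convention; this page doesn't state which /64s the VLANs actually use.

```python
import ipaddress

ULA_PREFIX = ipaddress.IPv6Network("fdc8:7f3a:1e2b::/48")


def slaac_subnets(delegated: ipaddress.IPv6Network) -> int:
    """How many /64s (the size SLAAC needs per link) fit in a delegated prefix."""
    return 2 ** (64 - delegated.prefixlen)


def vlan_subnet(vlan_id: int, ula: ipaddress.IPv6Network = ULA_PREFIX) -> ipaddress.IPv6Network:
    """Derive a per-VLAN /64 by reading the VLAN ID's decimal digits as a hex subnet ID.

    VLAN 30 -> fdc8:7f3a:1e2b:30::/64. Every VLAN ID up to 4094 is a valid
    16-bit hex value this way, so the result always stays inside the /48.
    """
    subnet_id = int(str(vlan_id), 16)
    base = int(ula.network_address) + (subnet_id << 64)  # 4th hextet holds the subnet ID
    return ipaddress.IPv6Network(f"{ipaddress.IPv6Address(base)}/64")
```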

4 VLAN Segmentation

Production (default), IoT (VLAN 30), Guest (VLAN 40), DMZ (VLAN 99). Inter-VLAN routing only through OPNsense with explicit per-service allow rules. Default-deny between all zones.
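The default-deny policy can be modeled as "pass only on an explicit match." OPNsense implements this with pf rules rather than code like the sketch below. The zone names and the two allow entries are hypothetical examples, not the lab's actual rule set.

```python
from typing import FrozenSet, NamedTuple


class Flow(NamedTuple):
    src_zone: str
    dst_zone: str
    dst_port: int


# Hypothetical explicit allow rules; any inter-zone flow not listed is dropped.
ALLOW: FrozenSet[Flow] = frozenset({
    Flow("iot", "production", 8123),  # e.g. IoT devices reporting to Home Assistant
    Flow("guest", "production", 53),  # e.g. DNS to the resolver
})


def permitted(flow: Flow) -> bool:
    """Default-deny: an inter-zone flow passes only if an explicit rule allows it."""
    return flow in ALLOW
```

Because there is no catch-all allow, a compromised honeypot in the DMZ gets no path to production unless someone deliberately adds one.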

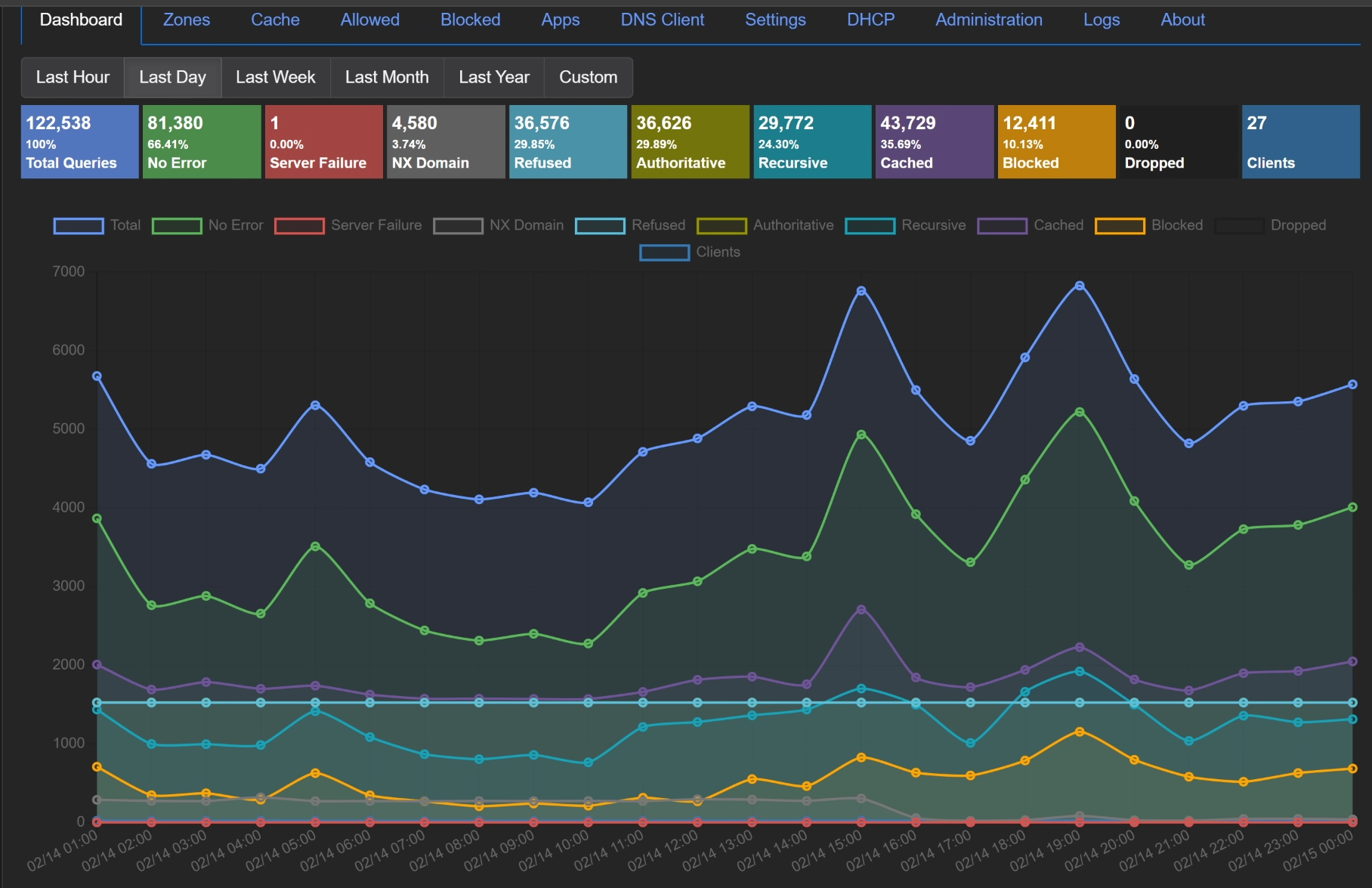

Technitium DNS - Hardened

Self-hosted recursive DNS with DoH/DoT upstream. OPNsense intercepts all DNS queries on ports 53 and 853 and redirects them to Technitium, so clients can't bypass it with hardcoded resolvers on those ports. Full DNS visibility across all VLANs.

Cloudflare Tunnels + Caddy

Outbound-only tunnels replace port forwarding. Caddy reverse proxy with a ZeroSSL wildcard cert (*.101904.xyz) routes to all internal services. No internal service is exposed through an inbound firewall port.

UniFi Enterprise Wireless

Managed access points with SSID-to-VLAN mapping, client isolation, and centralized controller for configuration and monitoring.

Automation, IaC & Monitoring

Infrastructure-as-code, workflow orchestration, automated monitoring, and Git-backed configuration management.

Ansible - Infrastructure as Code

Configuration management playbooks for LXC containers and host-level settings. Package installation, service configuration, and security baselines - all version-controlled.

N8N - SOAR Workflows

3 active SOAR workflows: Alert Enrichment + Auto-Block, CrowdSec Dual-Block, and Daily Security Digest. Webhook-triggered by Wazuh integratord for real-time response.

Prometheus + Grafana + Exportarr

Time-series monitoring with Prometheus scraping node-exporter (host metrics) and Exportarr (Sonarr/Radarr/Prowlarr). Grafana dashboards for resource utilization and service health.

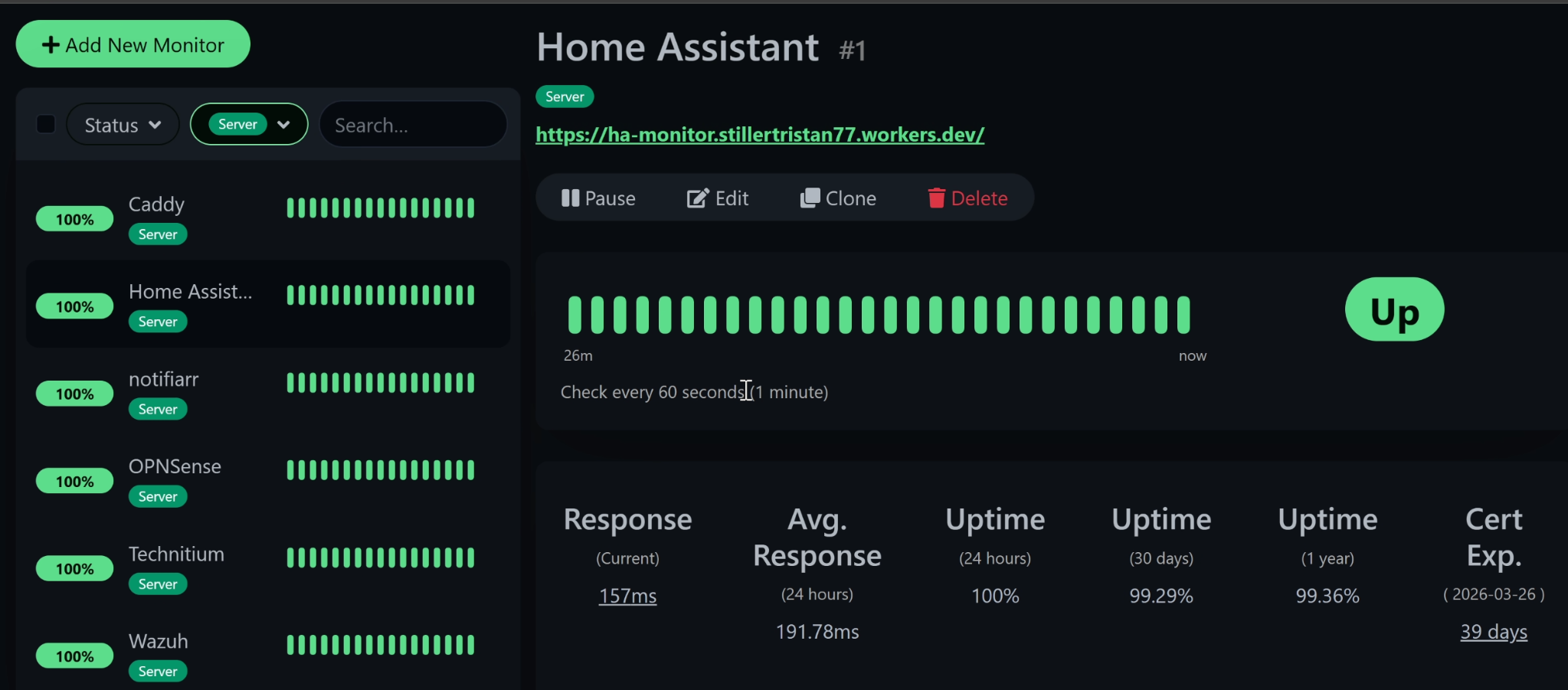

Uptime Kuma

21 monitors covering every service endpoint. HTTP/HTTPS checks, TCP port probes, and DNS resolution tests with alerting on degradation.

Git Config Backup

Private GitHub repo backing up all critical configs: Caddyfile, Wazuh rules/decoders, OPNsense config.xml, compose files, Cowrie plugins. Weekly automated push via cron.

Database Layer

PostgreSQL (Authentik, Wiki.js), MariaDB (media apps), Redis (caching), SQLite (N8N, Uptime Kuma). Each workload uses the appropriate data store.

Infrastructure

Bare-metal Proxmox VE hypervisor with PCIe passthrough, SR-IOV networking, GPU acceleration, and ordered boot sequencing.

🖥️ Proxmox VE - Single-Node Hypervisor

Intel i9-13900KF bare-metal running 4 QEMU/KVM VMs and 10 LXC containers with UEFI boot, PCIe passthrough, and deterministic startup ordering.

- Boot order: Cowrie → OPNsense → DNS → Cloudflared → CrowdSec (15s buffer) → Caddy → Docker → UniFi → Wazuh → Home Assistant → Uptime Kuma → Ansible → Unraid

- GPU passthrough: Intel ARC A380 to Docker LXC for hardware-accelerated transcoding

- SR-IOV: 16 VFs on ConnectX-5, each assigned to a VM/LXC - no software bridge

- USB passthrough for Zigbee/Z-Wave controllers to Home Assistant

- All VMs/CTs set to `onboot: 1`, so the full lab recovers unattended after power loss
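Proxmox expresses this ordering through each guest's `startup` property (`order=N`, optionally `up=S` to wait S seconds before starting the next guest). The sketch below derives those strings from the boot order above. It assumes the 15s CrowdSec buffer means a delay after CrowdSec starts and before Caddy. VMIDs are not listed on this page, so the sketch keys results by guest name.

```python
from typing import Dict, List

BOOT_ORDER: List[str] = [
    "Cowrie", "OPNsense", "DNS", "Cloudflared", "CrowdSec", "Caddy", "Docker",
    "UniFi", "Wazuh", "Home Assistant", "Uptime Kuma", "Ansible", "Unraid",
]
# Seconds Proxmox waits after starting a guest before starting the next (`up=`).
UP_DELAY: Dict[str, int] = {"CrowdSec": 15}


def startup_options(order: List[str], delays: Dict[str, int]) -> Dict[str, str]:
    """Map each guest to its Proxmox `startup` value, numbered from 1."""
    opts: Dict[str, str] = {}
    for i, name in enumerate(order, start=1):
        value = f"order={i}"
        if name in delays:
            value += f",up={delays[name]}"
        opts[name] = value
    return opts
```

Each value would then be applied with `qm set <vmid> --onboot 1 --startup <value>` for VMs, or `pct set` for containers.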

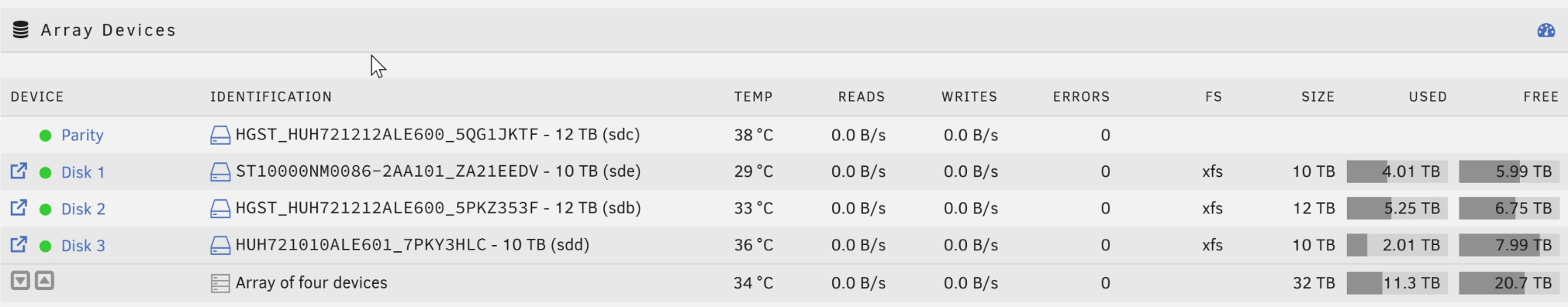

Unraid NAS

Network-attached storage VM with parity-protected array. NFS/SMB exports mounted into Docker LXC for media and compute workloads. SATA controller passed through via PCIe.

Docker LXC - 37 Containers

Privileged LXC with 16 cores, 24GB RAM, GPU passthrough, and Unraid NFS mounts. Runs 37 containers including all media, monitoring, SSO, and automation stacks.

NVIDIA BlueField-2 DPU

Data Processing Unit for hardware-accelerated networking experimentation. SmartNIC capabilities with OVS offload and isolated management plane. Currently in standalone testing - integration planned alongside SR-IOV.

Mellanox ConnectX-5

Dual-port 25GbE NIC with 16 SR-IOV Virtual Functions. Each VF assigned to a VM/LXC for line-rate networking without software bridge overhead.

Home Assistant + Alexa

Smart home automation with a custom AWS Lambda skill bridging Alexa voice control to on-premise Home Assistant - demonstrating hybrid cloud/edge patterns.

Utilities

IT Tools, CyberChef, Stirling PDF, Wiki.js, Adminer, Notifiarr - operational tools for day-to-day administration and incident investigation.